Containerization

The following is a project that I completed in January 2024. It was an exploration of containers and AWS. The objective was to take a simple application from code all the way to loading it into a Kubernetes (k8s) cluster in AWS.

This resulted in some useful material on containers and how to build them.

The full PowerPoint can be found here:

History and Evolution

Centralized to Distributed to Centralized

Computing has evolved from single-user, single-machine systems to multi-user systems, then to distributed systems, and is now being consolidated back into data centers. We had big single-user machines that turned into big multi-user mainframes. This is where UNIX and Linux ultimately came from. We then went distributed, with everyone having a computer and a server on their local network. We are now in a cycle of consolidating those servers back into data centers.

The Latest Technology (1940’s)

In colloquial usage, the terms "Turing-complete" and "Turing-equivalent" are used to mean that any real-world general-purpose computer or computer language can approximately simulate the computational aspects of any other real-world general-purpose computer or computer language.

Emulation (1980's)

Microsoft wrote its original BASIC on an Intel 8080 emulator running on a DEC PDP-10.

Virtualization

Modern virtualization stems from emulation - we are effectively emulating the CPU and hardware the software runs on. Most servers in classic bare metal models would only be utilized to 25% or 50%, and never up to 100%, meaning the remaining unused capacity is a waste of money, power, and resources.

Virtualization has the following benefits:

- Better utilization approaching 100%

- Separation and clear boundaries between software packages by no longer sharing operating systems

- Abstracting the system from the hardware, which makes moving to new hardware a simple file copy

New Problem

Emulating all hardware is inefficient, as computing cycles are spent providing the basic functions of the hardware itself.

Solution

Hardware and software working together allows the guest system to use the underlying hardware to simulate and separate different ‘virtual computers.’ While this gives a good performance increase, bringing virtualized performance closer to bare metal performance, it requires that the system being virtualized be compatible with the underlying hardware.

Architecture

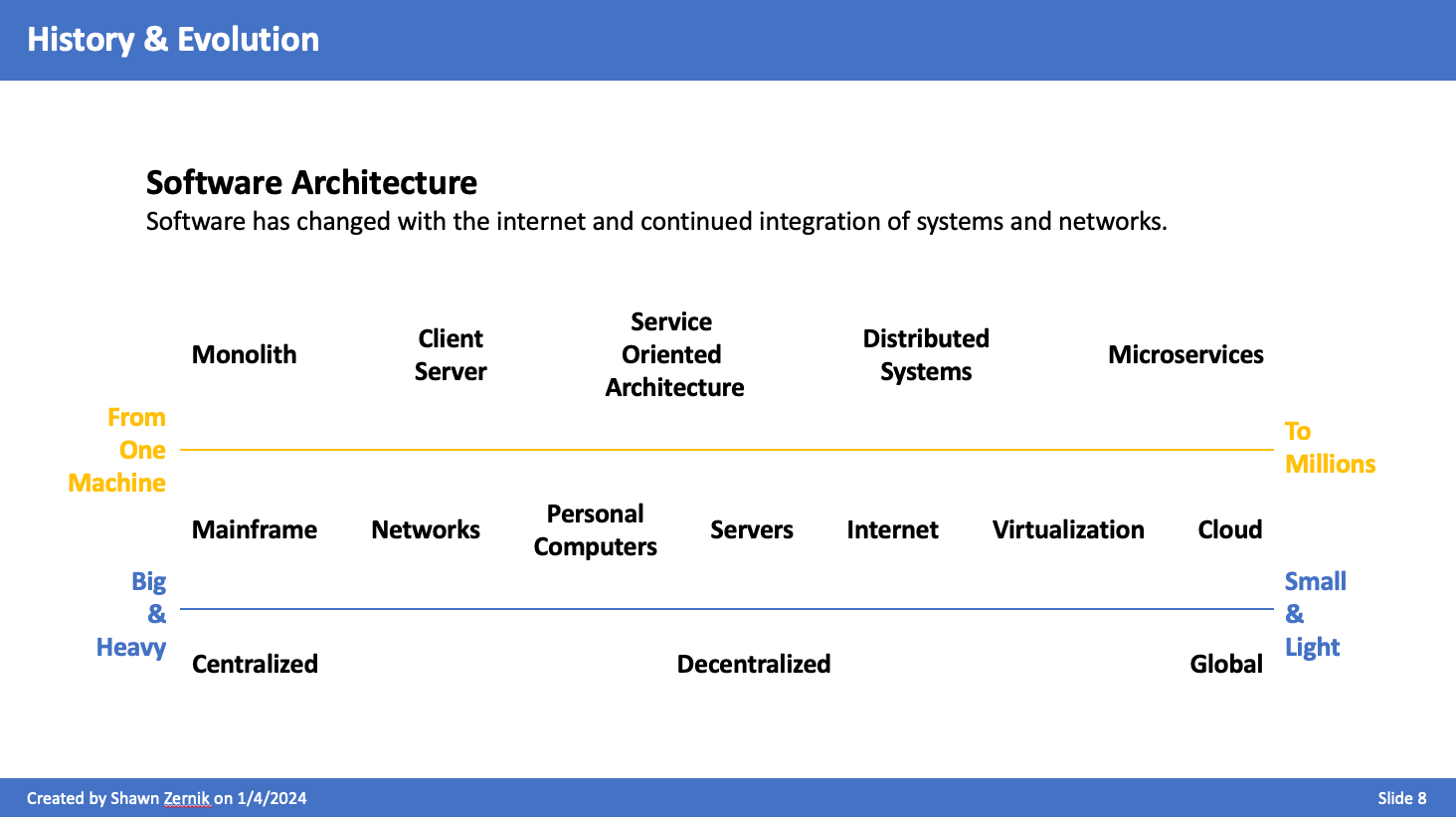

As we've gone from centralized to distributed to centralized, these cycles bear similarities, but lessons learned, lower costs, and more powerful hardware have changed the landscape. Software has changed too, with the internet and the continued integration of systems and networks.

The Next Problem

Virtualization is heavy: we are running thousands of operating systems; computing resources are spent running the same operating system over and over; memory and disk space are wasted; and human effort is needed to manage and orchestrate it all.

Solution

The solution started in the 1970s in the UNIX world. A system call named ‘chroot’ isolates a process's view of the filesystem. This isolation, also known as sandboxing, was later extended to the application execution space. This allowed applications to be safely separated from other applications for testing and security reasons in UNIX-like environments.

Ultimately this led to containerization. Rather than virtualize the hardware and spin up another copy of the operating system, we can reuse parts of the operating system in a secure manner that looks like its own system.

- https://www.opensourceforu.com/2016/07/many-approaches-sandboxing-linux/

- https://medium.com/an-idea/a-brief-history-of-container-virtualization-57fc96c02924

From Physical to Containers

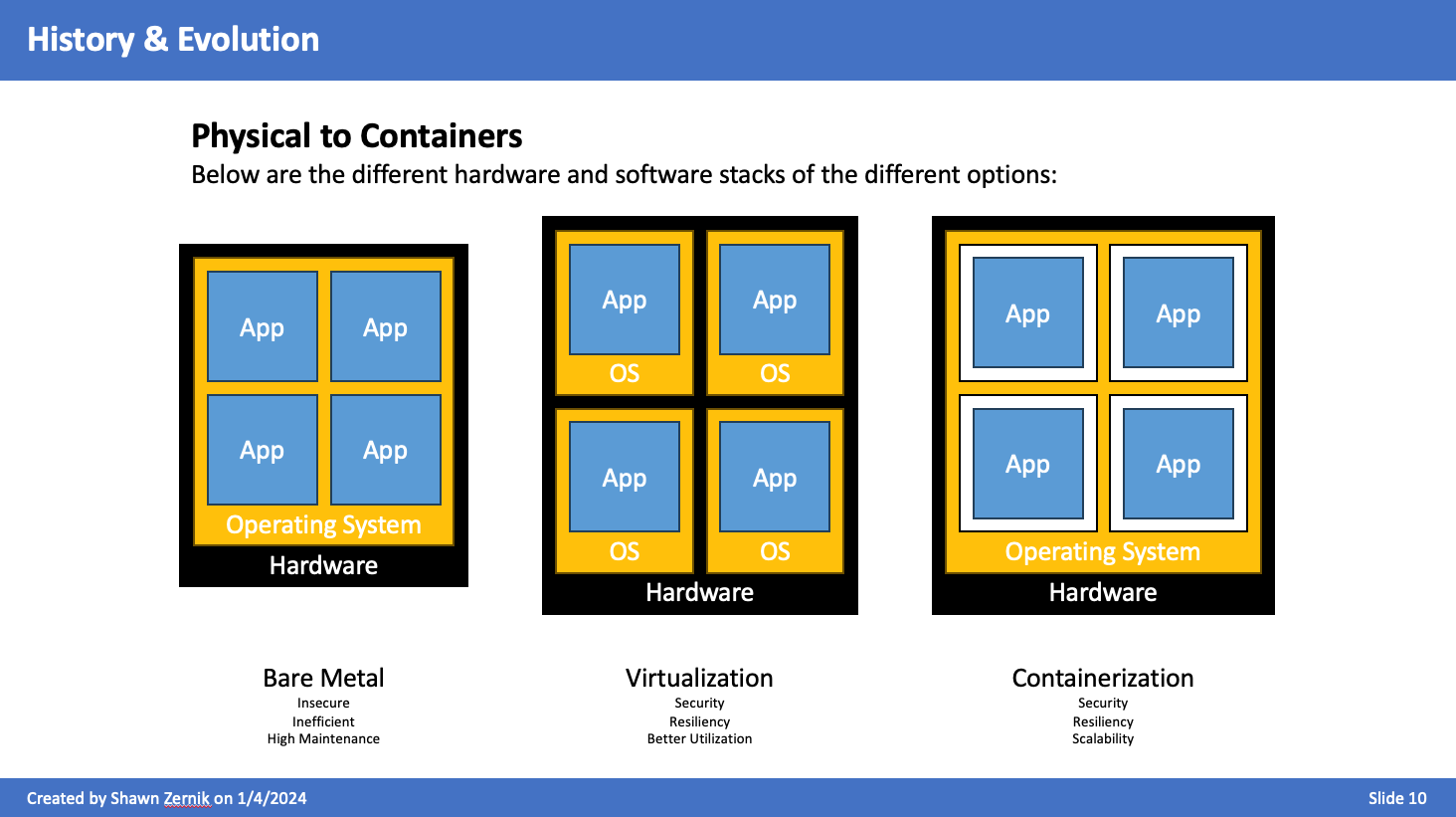

On a bare metal system, everything is together. The OS is configured for specific hardware with specific drivers, which makes it very hard to move. If an application or the OS goes haywire, there is no separation, so it all goes down.

Virtualization solved those problems, but every guest OS needs its own resources: for Windows, 1 to 2 GB of RAM, 1 to 2 CPUs at minimum, and a 40 GB disk. This is per application to get full isolation. This gets expensive.

Containerization is the next iteration in lighter-weight isolation. A container looks like its own computer and it is secure - that's where the technology started.

Container Runtimes

Docker was the first to market and is synonymous with "containers". But there exists an open standard for containers, and there are free alternatives.

Open Container Initiative

The Open Container Initiative is an open governance structure for the express purpose of creating open industry standards around container formats and runtimes.

Established in June 2015 by Docker and other leaders in the container industry, the OCI currently contains three specifications: the Runtime Specification (runtime-spec), the Image Specification (image-spec) and the Distribution Specification (distribution-spec). The Runtime Specification outlines how to run a “filesystem bundle” that is unpacked on disk. At a high-level an OCI implementation would download an OCI Image then unpack that image into an OCI Runtime filesystem bundle. At this point the OCI Runtime Bundle would be run by an OCI Runtime.

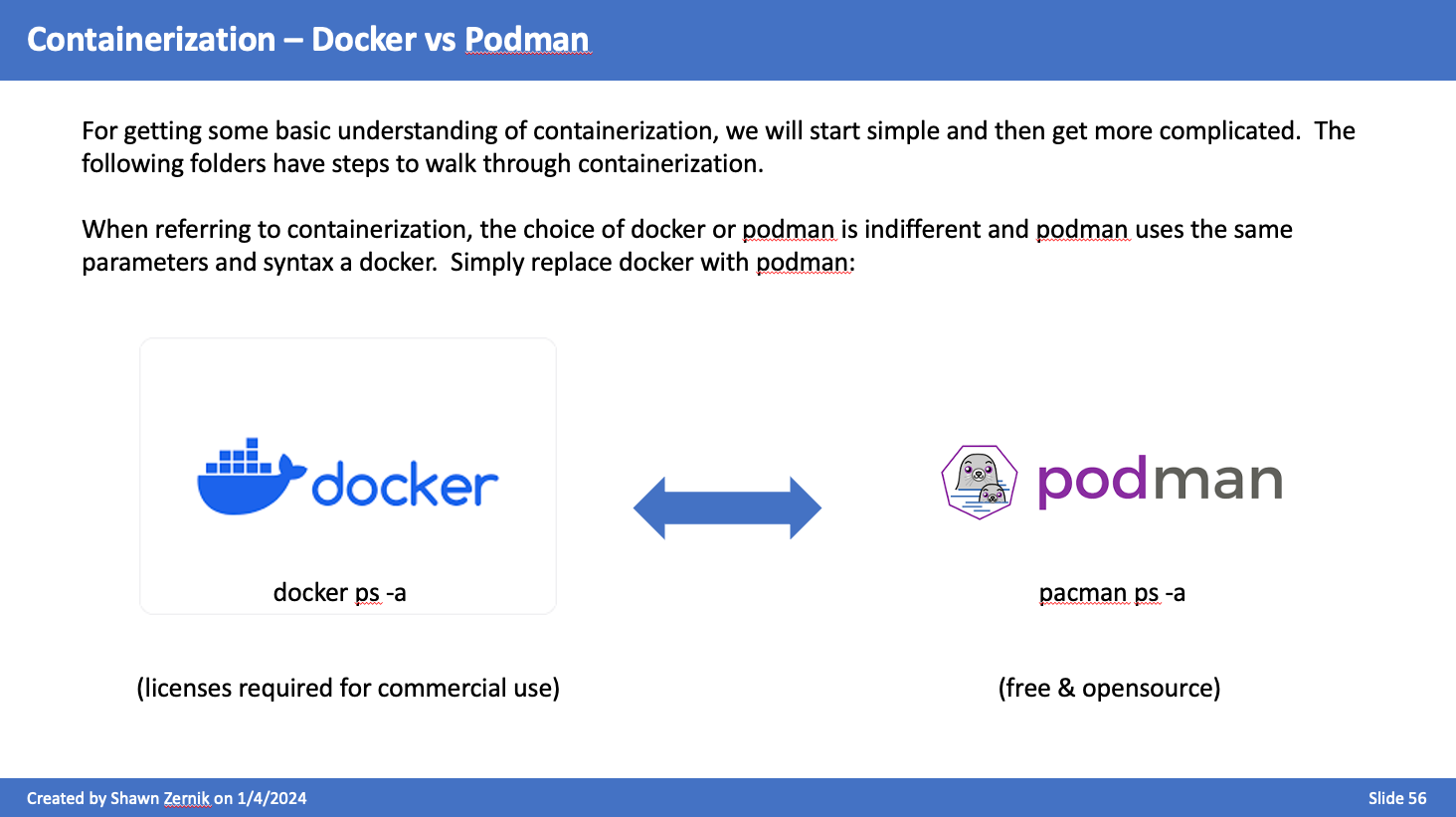

Docker vs Podman

To build a basic understanding of containerization, we will start simple and then get more complicated. The following folders have steps to walk through containerization.

When referring to containerization, the choice between docker and podman does not matter: podman uses the same parameters and syntax as docker. Simply replace docker with podman in each command.
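Because the two command lines match, a script can pick the engine once and reuse it everywhere. The sketch below is one possible way to do that; the ENGINE variable is a name chosen for this example, not something docker or podman define.

```shell
# ENGINE is a hypothetical variable name used only in this sketch.
# Prefer podman when it is installed, otherwise fall back to docker.
if command -v podman >/dev/null 2>&1; then
  ENGINE=podman
else
  ENGINE=docker
fi

# Every command in this walkthrough can then be written against $ENGINE,
# for example: "$ENGINE" network ls
echo "Using container engine: $ENGINE"
```

The rest of this walkthrough keeps writing docker for readability.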

Networking

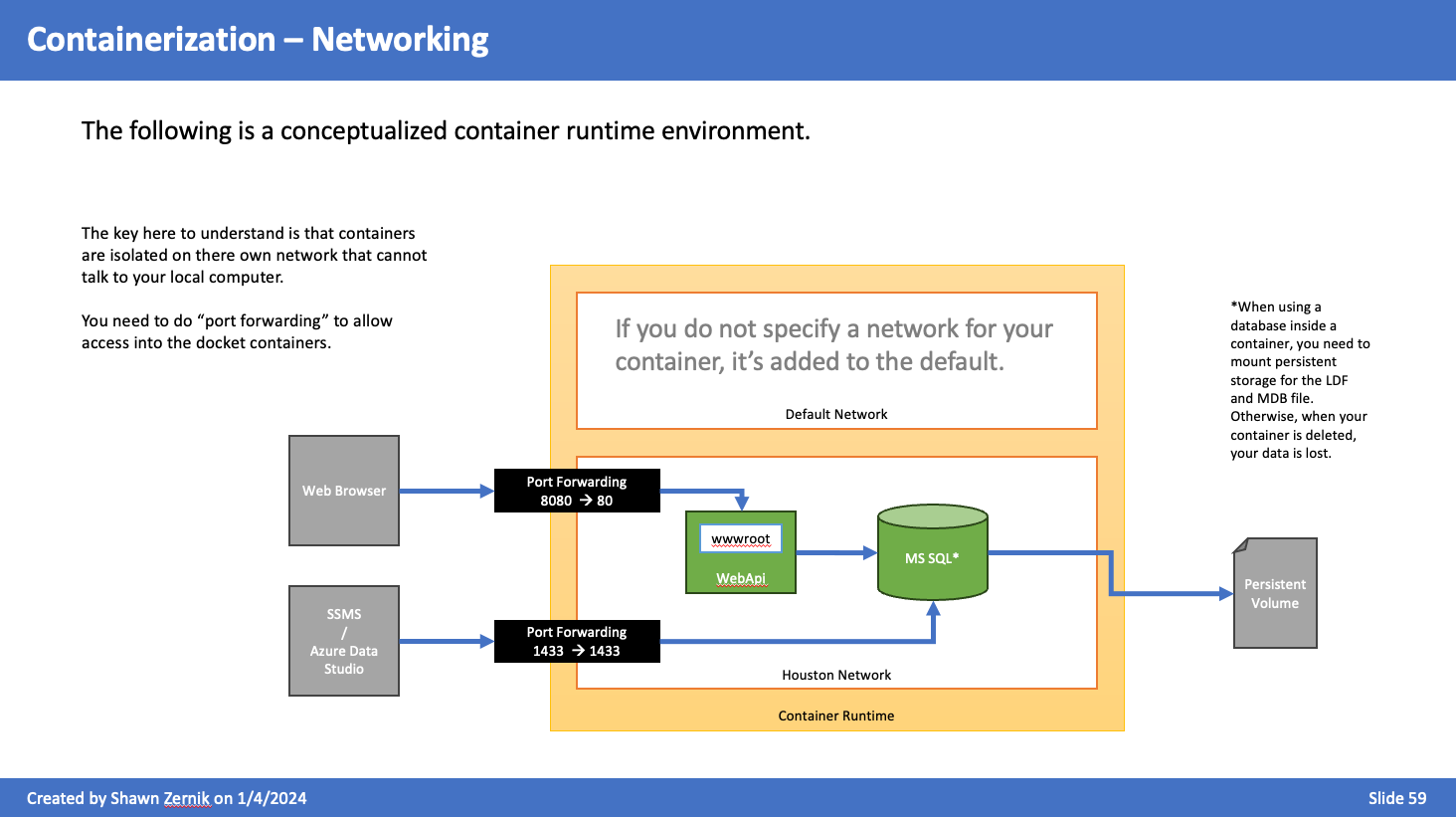

The following is a conceptualized container runtime environment. The key point to understand is that containers are isolated on their own network, which cannot be reached directly from your local computer.

You need to do “port forwarding” to allow access into the Docker containers.
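As a rough sketch of port forwarding, the -p flag publishes a container port on a host port. The function name start_web, the container name htown-web, the nginx image, and host port 8080 are all assumptions made for this example.

```shell
# Assumed values for illustration: function name, container name, image, host port.
start_web() {
  # -d runs the container in the background.
  # -p 8080:80 forwards host port 8080 to port 80 inside the container.
  docker run -d --name htown-web -p 8080:80 nginx
}
```

After calling start_web, the web server should answer at http://localhost:8080 on the host, even though the container itself sits on its own isolated network.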

For the next steps, we will explore containers and networking. First, we will discuss networking and then containers. Again, when we don’t specify a network, the container engine will use the “default” network. In most cases, we will want to isolate our applications and workloads on their own network.

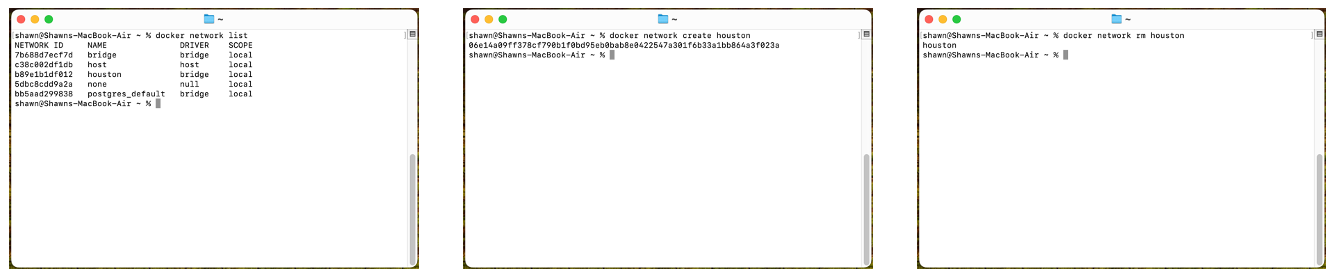

List Network

docker network list

The network type we typically use is “bridge”. A bridge is a virtual switch that connects the containers on the same network; for traffic leaving the host, we’ll use NAT.

Create Network

docker network create houston

If we don’t specify a network type, it’ll give us a bridge. The output on success is a long hexadecimal ID. When referencing an object by its ID, you only need to type enough of the leading characters to make it unique - a shorter prefix could match more than one ID.
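To illustrate referencing by prefix, the sketch below trims a full ID to its first 12 characters, the same short form that docker network ls prints. The short_id helper and the sample ID are made up for this example; a shorter prefix also works as long as it stays unique.

```shell
# Hypothetical helper: print the first 12 characters of a full ID.
short_id() {
  printf '%s\n' "$1" | cut -c1-12
}

# Example with a made-up 64-character ID (not real docker output).
full_id=0123456789abcdef0123456789abcdef0123456789abcdef0123456789abcdef
short_id "$full_id"
```

The trimmed value can be passed to commands such as docker network inspect or docker network rm in place of the network name.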

Delete Network

docker network rm houston

This will delete the network named “houston”. The output on success is simply the network name.

Working with Images

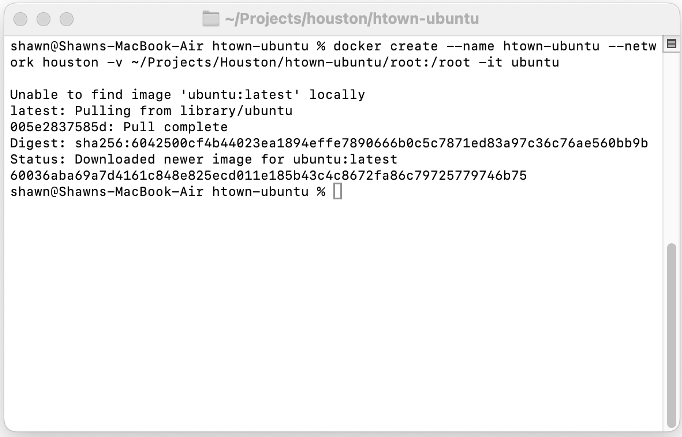

Next, we will create an ubuntu container from the standard ubuntu image. This container will map the “/root” folder to a location on your drive.

Create Container

docker create \
  --name htown-ubuntu \
  --network houston \
  -v ~/Projects/Houston/htown-ubuntu/root:/root \
  -i \
  -t \
  ubuntu

Parameters:

- create: tells docker to create a container

- --name: name of the container

- --network: network to attach the container to

- -v: mount a local folder to a path inside the container

- -i: interactive

- -t: use a tty (terminal / console)

- ubuntu: image to use

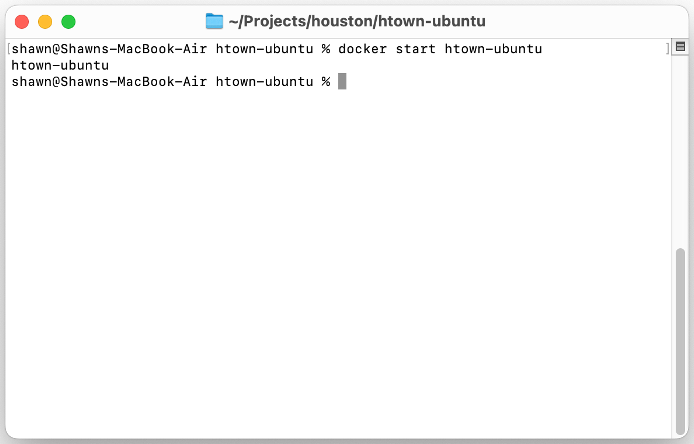

Start Container

docker start htown-ubuntu

Parameters:

- start: tells docker to start a container

- htown-ubuntu: name of the container to start

On success, it prints the container's name.
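To confirm the container really is up and to get a shell inside it, something like the following should work. The function names are made up for this sketch; the docker subcommands and flags inside them are standard.

```shell
# Hypothetical helper names; the docker commands inside do the real work.

# Prints "true" when the named container is running and "false" when it
# exists but is stopped.
is_running() {
  docker inspect --format '{{.State.Running}}' "$1"
}

# Open an interactive bash shell inside the running container.
open_shell() {
  docker exec -it "$1" bash
}
```

For example, run is_running htown-ubuntu and then open_shell htown-ubuntu. Files created under /root in that shell should appear in ~/Projects/Houston/htown-ubuntu/root on the host, because of the -v mount used when the container was created.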

Lago Vista Technologies
